Because relational databases sit at the heart of most businesses’ data architectures, most businesses looking to use Kafka to implement event sourcing (see Martin Fowler’s article on Event Sourcing) have adopted database Change Data Capture (CDC) connectors for Kafka built on the Debezium project.

Debezium works in a really neat way: it reads changes from the database’s binary transaction log. Coupled with the Kafka Connect API, it can stream events representing the changes made in the database to Kafka.

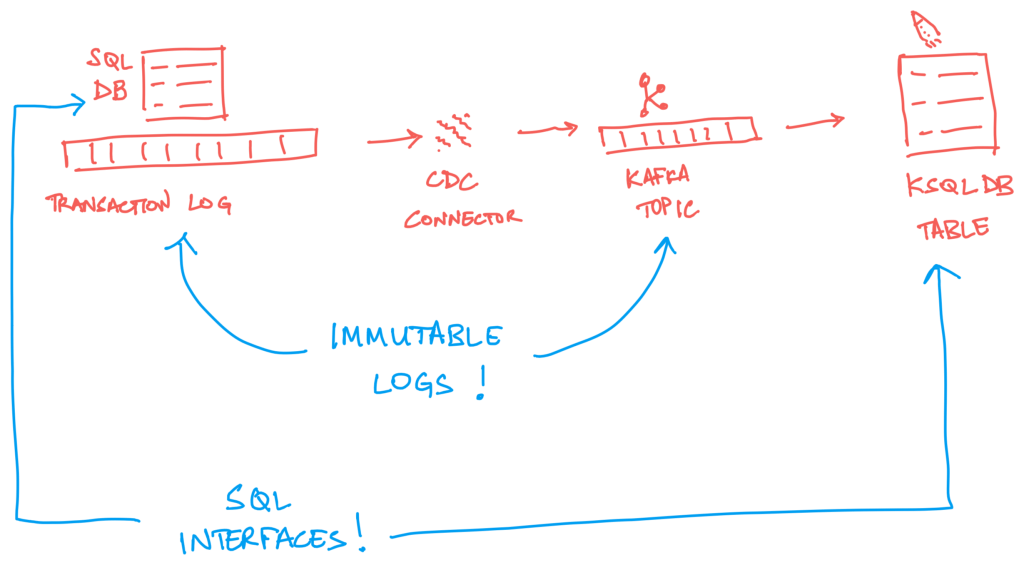

What I find interesting is that the pipeline of producing events from the database transaction log is the mirror image of a ksqlDB table abstraction formed from the events in a Kafka topic: CDC gets events representing state changes from a data system that’s primarily designed to give you a current state, while a ksqlDB table does the opposite – gives you a current state from a log of sequential events leading up to that state.
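To make the "table from a log" half of that mirror image concrete, here is a minimal Python sketch. It is not ksqlDB code; the `(key, value)` event shape and the `materialize` helper are assumptions made up for illustration, with `None` standing in for a tombstone.

```python
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical, simplified change events: (key, value) pairs in log order.
# A value of None stands in for a tombstone (the row was deleted).
ChangeEvent = Tuple[str, Optional[Dict[str, Any]]]


def materialize(events: List[ChangeEvent]) -> Dict[str, Dict[str, Any]]:
    """Fold a log of change events into the latest state per key."""
    table: Dict[str, Dict[str, Any]] = {}
    for key, value in events:
        if value is None:
            # Tombstone: the key no longer exists in the current state.
            table.pop(key, None)
        else:
            # Insert or update: the latest value for a key wins.
            table[key] = value
    return table


events: List[ChangeEvent] = [
    ("42", {"name": "Ada", "city": "London"}),
    ("43", {"name": "Bob", "city": "Paris"}),
    ("42", {"name": "Ada", "city": "Berlin"}),  # update to row 42
    ("43", None),                               # row 43 deleted
]

current_state = materialize(events)
print(current_state)
```

Replaying the four events leaves a single row, key `42` with `city` set to `Berlin`, which is the current state a table view would expose.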

There are only a few parameters / properties you need to set in the database and in the Kafka topic to stream data change events to Kafka. The most interesting ones are:

- Set the Kafka topic’s cleanup policy to `compact` instead of `delete`. This way, when a database row is deleted and the connector produces a tombstone record, it marks the records for that key for deletion the next time a cleanup runs.
- Set `after.state.only` to `false` to get the before and after state of the record that was updated, so that you know which specific fields were updated. This way you could reverse events to get back to an earlier state.
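As a rough illustration of what having both states buys you, the sketch below diffs the two states and reverses an update. The event is a plain dict with only `before` and `after` keys, loosely modeled on a change event rather than copied from a real connector payload; `changed_fields` and `reverse_event` are hypothetical helpers, not part of Debezium or Kafka.

```python
from typing import Any, Dict, Tuple

Row = Dict[str, Any]


def changed_fields(before: Row, after: Row) -> Dict[str, Tuple[Any, Any]]:
    """Return {field: (old, new)} for every field whose value differs."""
    fields = sorted(set(before) | set(after))
    return {
        f: (before.get(f), after.get(f))
        for f in fields
        if before.get(f) != after.get(f)
    }


def reverse_event(event: Dict[str, Row]) -> Dict[str, Row]:
    """Build the inverse update by swapping the before and after states."""
    return {"before": event["after"], "after": event["before"]}


# Simplified, assumed shape of an update event carrying both states.
update_event = {
    "before": {"id": 42, "name": "Ada", "city": "London"},
    "after": {"id": 42, "name": "Ada", "city": "Berlin"},
}

diff = changed_fields(update_event["before"], update_event["after"])
undo = reverse_event(update_event)
print(diff)
print(undo["after"])
```

Applying `undo["after"]` to the row would restore the earlier state. That is only possible because the event carries `before` as well as `after`.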